UPDATED ON: December 11, 2014

Web Audio API Basics is a tutorial that will give you a basic understanding of the Web Audio API. It’s part of a series that will start you off on your Web Audio adventures. Web Audio API Basics will attempt to cover what the Web Audio API is, the concept of audio signal routing, and different types of audio nodes. We’ll finish by applying what we’ve learned to create a working example. After covering the basics in this tutorial, we’ll get into some more advanced techniques and even how to use the API to make music in your browser.

What is the Web Audio API

You may have heard that the Web Audio API is the coolest thing since sliced bread. It’s true! For years, we were severely limited in our ability to control and manipulate sound in the browser. Then a ray of light appeared, and the HTML5 audio element brought us out of the dark ages. Now the Web Audio API takes another giant step toward revolutionizing the way we think about audio on the web.

The Web Audio API is a high-level JavaScript Application Programming Interface (API) that can be used for processing and synthesizing audio in web applications. The audio processing is actually handled by Assembly/C/C++ code within the browser, but the API allows us to control it with JavaScript. This opens up a whole new world of possibilities. Before we jump into it though, let’s make sure we’re prepared for the journey.

A Web Server is Required

To follow along with this tutorial, you will need a working web server such as WAMP (for Windows) or MAMP (for Mac). The names are acronyms for Windows (or Mac), Apache, MySQL, and PHP. Both packages can be downloaded for free from the links below. WAMP (or MAMP) runs a local web server on your computer, which browsers generally require before they will load audio files for playback with the Web Audio API.

Download WAMP here if you’re a Windows user.

Download MAMP here if you’re a Mac user.

After downloading, install the package and start the server. Then create a new directory called “tutorials” inside the “www” directory that was installed with WAMP (MAMP calls this directory “htdocs”). Open your favorite code editor and create a new document. Copy the following code and paste it into your new document.

<!DOCTYPE html>

<html>

<h1>Web Audio API Basics Tutorial</h1>

<script>

</script>

</html>

Save this file as “webaudioapi-basics.html” in the “tutorials” directory. This will be the template we use to experiment with various features of the Web Audio API. The code snippets should be placed between the script tags. Okay, let’s get back into it!

Audio Signal Routing

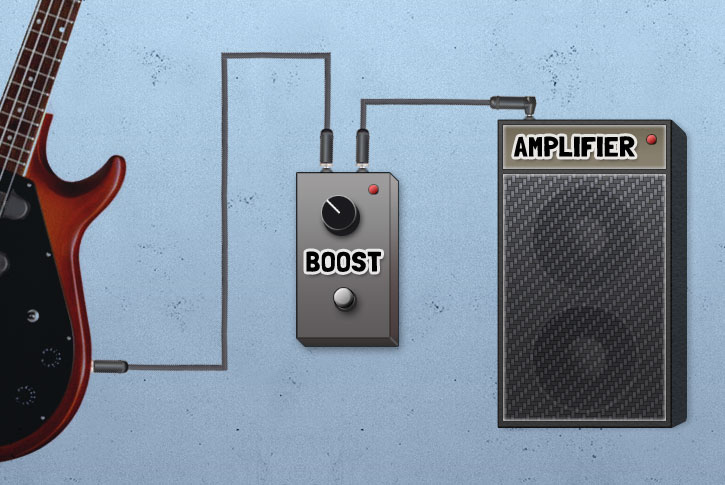

Understanding the audio signal graph is essential to using the Web Audio API. The audio signal graph exists within a container (AudioContext), and is made up of nodes (AudioNodes) dynamically connected together in a modular fashion. This allows arbitrary connections between different nodes. We can make a simplified analogy to a bass guitar player. The bass guitar is plugged into a boost pedal, which is then plugged into an amplifier.

When a note is played, the signal travels from the bass (AudioSourceNode) through an instrument cable into the boost pedal (GainNode). The signal then travels through another instrument cable to the amplifier, where the sound is projected through the amp’s speakers (AudioDestinationNode).

An audio signal can travel through any number of nodes as long as it originates from a source node and terminates at a destination node. If the patch cables are connected properly, we can add as many pedals (AudioNodes) to the chain as desired.
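To picture the patch cables in code, here is a minimal sketch of a helper that plugs each node into the next one. The name connectChain is our own invention for illustration and is not part of the Web Audio API; the only real API call it relies on is each node’s connect() method.

```javascript
// Hypothetical helper (not part of the Web Audio API):
// connect every node in the list to the node that follows it.
function connectChain(nodes) {
  for (let i = 0; i < nodes.length - 1; i++) {
    nodes[i].connect(nodes[i + 1]); // plug a "patch cable" into the next node
  }
  return nodes[nodes.length - 1]; // the last node in the chain
}

// Browser usage, following the bass player analogy:
// const context = new AudioContext();
// connectChain([
//   context.createOscillator(), // bass guitar
//   context.createGain(),       // boost pedal
//   context.destination         // amplifier
// ]);
```

Adding another pedal is then just a matter of adding another node to the list, as long as the list still begins with a source and ends with the destination.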

Audio Context

In order to use the Web Audio API, we must first create a container. This container is called an AudioContext. It holds everything else inside it. Keep in mind that only one AudioContext is usually needed. Any number of sounds can be loaded inside a single AudioContext. The following snippet creates a simple AudioContext.

var context = new AudioContext(); // Create audio container

UPDATE: As of July 2014, the “webkit” prefix is no longer needed or recommended.

When this tutorial was first written, the Web Audio API only worked in WebKit-based browsers such as Chrome and Safari, so the “webkit” prefix (webkitAudioContext) was recommended. Firefox and the other major browsers have since implemented the unprefixed API, so the plain AudioContext constructor is the one to use.
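If you still need to support an older WebKit browser that only knows the prefixed name, one possible approach is a small fallback. This is only a sketch: createAudioContext is a hypothetical helper name of ours, and passing in the global object (window in a browser) is a design choice made here so the function is easy to try out.

```javascript
// Hypothetical helper: prefer the standard constructor and fall back
// to the old "webkit"-prefixed one if that is all the browser offers.
function createAudioContext(globalObject) {
  const ContextClass = globalObject.AudioContext || globalObject.webkitAudioContext;
  if (!ContextClass) {
    return null; // this browser has no Web Audio API support at all
  }
  return new ContextClass();
}

// Browser usage:
// const context = createAudioContext(window);
// if (context === null) { /* tell the user their browser is too old */ }
```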

Audio Source

An AudioSourceNode is an interface representing an audio source, an AudioNode which has no inputs and a single output. There are basically two kinds of audio source nodes. An oscillator can be used to generate sound from scratch, or audio files (wav, mp3, ogg, etc.) can be loaded and played back. In this tutorial (Web Audio API Basics), we’ll focus on generating our own sounds.

Oscillators

To generate your own repeating waveforms, first create an oscillator. Then connect it to a destination, and tell it when to play. It’s that easy!

var oscillator = context.createOscillator(); // Create sound source

oscillator.connect(context.destination); // Connect sound to speakers

oscillator.start(); // Generate sound instantly

UPDATE: As of July 2014, oscillator.start() should be used instead of oscillator.noteOn(0).
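An oscillator also lets us choose the pitch and the shape of the wave, and we can schedule when it stops. The sketch below assumes a helper called playTone, which is our own name and not something the API provides; the type and frequency properties and the start() and stop() methods are the real oscillator API.

```javascript
// Hypothetical helper: play one tone for a fixed number of seconds.
// "type" may be 'sine', 'square', 'sawtooth' or 'triangle'.
function playTone(context, frequency, duration, type) {
  const oscillator = context.createOscillator();
  oscillator.type = type || 'sine';        // wave shape, sine if none given
  oscillator.frequency.value = frequency;  // pitch in hertz
  oscillator.connect(context.destination); // connect sound to speakers

  const now = context.currentTime;         // the context's own clock, in seconds
  oscillator.start(now);                   // start right away
  oscillator.stop(now + duration);         // schedule the stop
  return oscillator;
}

// Browser usage:
// const context = new AudioContext();
// playTone(context, 110, 0.5, 'square'); // a low A for half a second
```

Current browsers generally keep an AudioContext suspended until the user interacts with the page, so calling a function like this from a click handler is the safer bet.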

Audio Files

We can also use data from audio files with the Web Audio API. This is done with the help of a buffer and an XMLHttpRequest. We’ll get into that more in future tutorials. For now, just be aware that it’s possible.
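As a small preview, the general shape of that approach might look like the sketch below. The function names loadSound and playBuffer and the file name in the usage comment are placeholders of ours; the calls on XMLHttpRequest and on the context (decodeAudioData, createBufferSource) are the real API. Error handling is kept to a bare minimum.

```javascript
// Hypothetical helper: fetch an audio file and decode it into a buffer.
function loadSound(context, url, onLoaded) {
  const request = new XMLHttpRequest();
  request.open('GET', url, true);
  request.responseType = 'arraybuffer'; // we want raw bytes, not text

  request.onload = function () {
    // No HTTP status check here; a real page would want one.
    context.decodeAudioData(request.response, onLoaded, function (error) {
      console.error('Could not decode ' + url, error);
    });
  };
  request.send();
}

// Hypothetical helper: play a decoded buffer through the speakers.
function playBuffer(context, audioBuffer) {
  const source = context.createBufferSource(); // create sound source
  source.buffer = audioBuffer;                 // tell it what to play
  source.connect(context.destination);         // connect sound to speakers
  source.start();                              // play instantly
  return source;
}

// Browser usage (the file name is a placeholder):
// const context = new AudioContext();
// loadSound(context, 'sound.wav', function (buffer) {
//   playBuffer(context, buffer);
// });
```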

Audio Destination

The AudioDestinationNode is an AudioNode representing the final audio destination. Consider it as an output to the speakers. This is the sound that the user will actually hear. There should be only one AudioDestinationNode per AudioContext. An audio source must be connected to a destination to be audible. That seems logical enough.

oscillator.connect(context.destination); // Connect sound to speakers

Intermediate Audio Nodes

Besides audio sources and the audio destination, there are many other types of AudioNodes that act as intermediate processing modules we can route our signal through. The following is a list of some audio nodes, and what they do.

- GainNode multiplies the input audio signal

- DelayNode delays the incoming audio signal

- AudioBufferSourceNode plays back an AudioBuffer, a memory-resident audio asset for short audio clips

- PannerNode positions an incoming audio stream in three-dimensional space

- ConvolverNode applies a linear convolution effect given an impulse response

- DynamicsCompressorNode implements a dynamics compression effect

- BiquadFilterNode implements very common low-order filters

- WaveShaperNode implements non-linear distortion effects
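Every node in this list is wired up the same way. As an illustration, here is a sketch that routes an oscillator through a low-pass BiquadFilterNode before it reaches the speakers. The helper name createFilteredOscillator and the choice of a sawtooth wave are assumptions of ours, picked only because a bright wave makes the filter easy to hear.

```javascript
// Hypothetical helper: oscillator -> low-pass filter -> speakers.
function createFilteredOscillator(context, cutoffHz) {
  const oscillator = context.createOscillator();
  const filter = context.createBiquadFilter();

  oscillator.type = 'sawtooth';        // a bright wave with lots of overtones
  filter.type = 'lowpass';             // let low frequencies through
  filter.frequency.value = cutoffHz;   // attenuate content above this frequency

  oscillator.connect(filter);          // connect sound to filter
  filter.connect(context.destination); // connect filter to speakers
  return { oscillator: oscillator, filter: filter };
}

// Browser usage:
// const context = new AudioContext();
// const voice = createFilteredOscillator(context, 400);
// voice.oscillator.start();
```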

We can adjust the volume of the sound with a GainNode. Create the GainNode the same way the oscillator was created, by declaring a variable and assigning it the result of the matching create method on the AudioContext. The default gain value is one, which passes the input through to the output unchanged. Here we set the gain value to 0.3, which scales the signal down to thirty percent of its original level. Feel free to modify this number, and reload the page to hear the difference.

var gainNode = context.createGain(); // Create gain node

gainNode.gain.value = 0.3; // Set gain node to 30 percent volume

UPDATE: As of July 2014, createGain should be used instead of createGainNode.
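To make the word “multiplies” concrete, the sketch below mimics what a gain stage does to a handful of sample values. It is only a mental model written in plain JavaScript, not how the browser implements GainNode, and both function names are our own. The second function converts a gain value to decibels, the unit audio people usually use for level changes.

```javascript
// Conceptual model only: multiply every sample by the gain value.
function applyGain(samples, gain) {
  return samples.map(function (sample) {
    return sample * gain;
  });
}

// Convert a linear gain value to decibels.
function gainToDecibels(gain) {
  return 20 * Math.log10(gain);
}

// A gain of 1 leaves the samples unchanged (0 dB),
// while a gain of 0.3 scales each one to 30 percent of its value.
const quieter = applyGain([1, -0.5, 0], 0.3);
const levelChange = gainToDecibels(0.3);
```

A gain of 0.3 works out to a drop of roughly 10.5 decibels, which is why thirty percent still sounds louder than the number might suggest.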

If a new node is added between the source and destination, the source must connect to the node, and the node must connect to the destination. Just like the boost pedal in our bass player analogy.

oscillator.connect(gainNode); // Connect sound to gain node

gainNode.connect(context.destination); // Connect gain node to speakers

Final Code

To wrap things up, we’ll combine what we’ve learned so far into a working example. First we create an audio container. Inside the container, we create a bass guitar and a boost pedal. We connect the bass to the boost pedal. We connect the boost pedal to the amplifier. We turn the volume down to 30% on the pedal and play the bass instantly. The code below corresponds to the bass player analogy.

var context = new AudioContext(); // Create audio container

var oscillator = context.createOscillator(); // Create bass guitar

var gainNode = context.createGain(); // Create boost pedal

oscillator.connect(gainNode); // Connect bass guitar to boost pedal

gainNode.connect(context.destination); // Connect boost pedal to amplifier

gainNode.gain.value = 0.3; // Set boost pedal to 30 percent volume

oscillator.start(); // Play bass guitar instantly
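The example above plays forever, and current browsers generally will not let a page make sound until the user has interacted with it. One way to handle both points is sketched below: the same bass rig is wrapped in a function that hands back a way to stop it, and it is started from the first click on the page. The name startBass and the two second timeout are assumptions of ours, chosen purely for illustration.

```javascript
// Hypothetical helper: build the bass -> boost pedal -> amplifier chain,
// start playing, and return a function that stops the sound.
function startBass(context, volume) {
  const oscillator = context.createOscillator(); // create bass guitar
  const gainNode = context.createGain();         // create boost pedal

  oscillator.connect(gainNode);                  // bass guitar to boost pedal
  gainNode.connect(context.destination);         // boost pedal to amplifier
  gainNode.gain.value = volume;                  // set boost pedal volume
  oscillator.start();                            // play bass guitar instantly

  return function stopBass() {
    oscillator.stop();       // stop playing
    oscillator.disconnect(); // unplug the cables
    gainNode.disconnect();
  };
}

// Only runs in a browser: wait for the first click, then play for two seconds.
if (typeof document !== 'undefined' && typeof AudioContext !== 'undefined') {
  document.addEventListener('click', function onFirstClick() {
    document.removeEventListener('click', onFirstClick);
    const context = new AudioContext();
    const stopBass = startBass(context, 0.3);
    setTimeout(stopBass, 2000);
  });
}
```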

Well, that’s about it for Web Audio API Basics. I’m ready to explore the outer limits of imagination. Are you? The possibilities are endless. How are you going to use the Web Audio API to make the web awesome?

Other tutorials in this series

- Web Audio API Oscillators

- Web Audio API Audio Buffer

- Controlling Web Audio API Oscillators

- Play a Sound with Web Audio API

- Web Audio API BufferLoader

- Timed Rhythms with Web Audio API and JavaScript
